Stanford Online’s Agentic AI webinar (2024) highlights the evolution of language models into “agentic” systems. In this blog-style handout, we break down the lecture’s technical and conceptual material into accessible sections. The goal is to preserve the talk’s depth while making it easy to follow. We’ll cover core concepts, agent architectures, examples, frameworks, and key takeaways, all based exclusively on the content of the Stanford 2024 lecture:

TL;DR

LLMs form the foundation, but augmenting them with tools, memory, and iterative logic turns them from passive predictors to active problem-solvers.

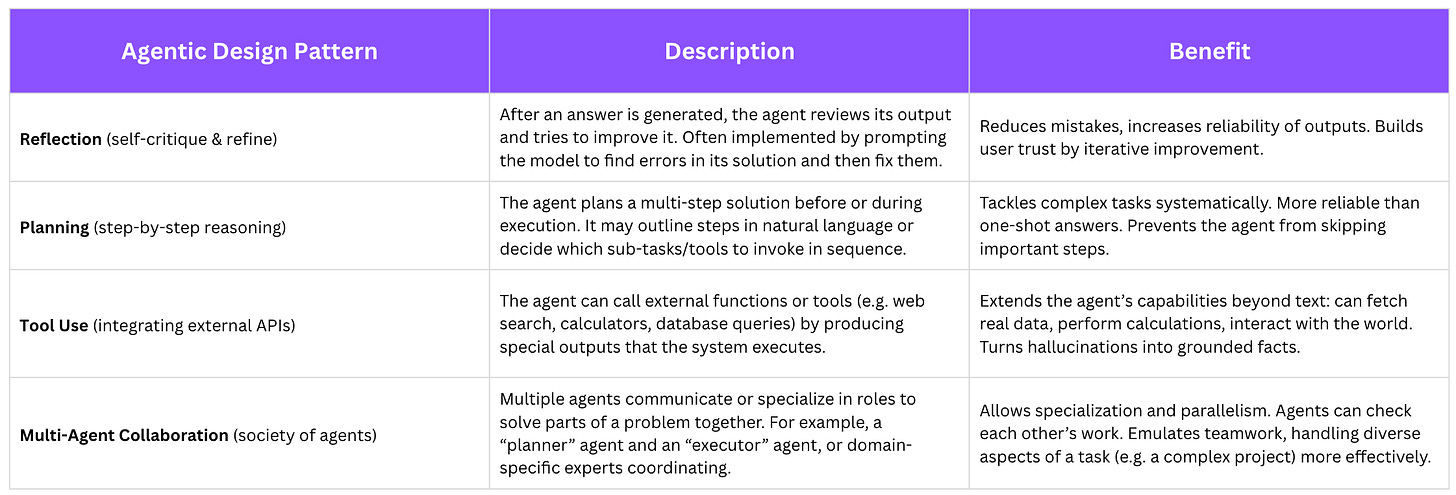

Core agentic patterns (Planning, Reflection, Tool Use, Multi-agent) provide reusable solutions for building these advanced AI systems. They address the key limitations of LMs (hallucination, knowledge gaps, single-turn limits) and are already demonstrating their value.

Agentic AI has broad applications – from writing code and drafting reports to automating customer support and beyond. Early adopters in industry are seeing that these AI agents can learn, adapt, and scale with far less human micromanagement, potentially leading to huge efficiency gains.

Nonetheless, building agents responsibly is paramount. Developers must incorporate safeguards, thorough testing, and ethical guidelines to ensure these systems truly help rather than harm. Strategies like human oversight, transparency of AI decisions, and rigorous evaluation become even more important as autonomy increases.

The field is moving quickly. The lecture encouraged continuing to learn and experiment – the “progression of LM usage” is ongoing, and staying at the cutting edge (via courses, research literature, and hands-on projects) will be crucial for anyone looking to innovate with AI agents.

Introduction

What are AI agents? In the context of this Stanford lecture, agentic AI refers to AI systems (often built on large language models, LLMs) that don’t just generate text, but can perceive, decide, act, and learn in an autonomous loop. Traditional LLMs are static text predictors – you give a prompt, they give a completion. Agentic AI is about making these models dynamic problem-solvers that interact with tools, remember context, plan actions, and iteratively improve. The lecture emphasizes that this shift from static to dynamic AI is revolutionary, enabling new applications and greater reliability.

Speaker: Insop Song (Principal ML Researcher at GitHub Next) led the webinar, drawing on experience applying LLMs to coding and productivity tools. The session’s structure followed a logical progression:

A quick overview of LLMs – how they work, are trained, and are used.

The limitations of using LLMs in isolation.

Techniques to overcome these limitations: e.g. Retrieval Augmented Generation (RAG) and tool use.

The definition of agentic LMs and key agentic usage patterns: reflection, planning, tool use, iterative prompting, etc.

Design patterns and a real-world example (a customer support agent) tying everything together.

Discussion of applications, best practices, and ethical considerations for deploying agentic AI.

Let’s dive into each of these sections in detail.

Language Models 101: How LLMs Are Trained and Used

Modern language models (like GPT-4, etc.) are the foundation of agentic AI, so the lecture begins with how LLMs work and how they’re optimized for tasks:

Next-Word Prediction: At their core, LLMs are trained to predict the next word given prior text. If you feed in some input text, the model generates continuation word-by-word. This simple mechanism underlies their ability to produce coherent sentences and paragraphs.

Training in Two Stages: Large LMs undergo a two-part training process:

Pre-training: The model learns from a massive text corpus (internet pages, books, etc.) by playing the “fill in the blank” game – predict the next token for each sequence. This gives it broad world knowledge and fluency. After pre-training, the LM is a general predictor of text but not yet aligned to follow user instructions directly.

Post-training: This makes the model actually useful and safe for human-facing tasks. Post-training often includes:

Supervised Fine-Tuning (SFT) on instruction-following data. Here, the model is trained on example prompts with desired responses (e.g. question → answer). This teaches it to follow instructions or questions and respond helpfully, in a specific style.

Reinforcement Learning from Human Feedback (RLHF). The model’s behavior is further refined by human preferences – for example, humans rate outputs, and the model is optimized to prefer outputs that humans find good. RLHF aligns the AI with human values and reduces toxic or nonsensical outputs.

Example – Instruction Template: The lecture showed a typical format of instruction tuning data. It looks like:

### Instruction:

{prompt or task description}

### Input:

{optional additional context}

### Response:

{desired model output}

This structure (used in many open-source instruction-tuned LMs) teaches the model to expect a user instruction and produce a helpful response accordingly.
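As a rough illustration of the template above, here is a minimal Python sketch that renders one training example in that layout. The function name `format_example` and the sample strings are hypothetical, chosen only for this illustration; real instruction-tuning datasets may differ in exact spacing and field names.

```python
def format_example(instruction: str, response: str, context: str = "") -> str:
    """Render one example in the Instruction / Input / Response layout."""
    parts = [f"### Instruction:\n{instruction}"]
    if context:  # the Input section is optional, so skip it when empty
        parts.append(f"### Input:\n{context}")
    parts.append(f"### Response:\n{response}")
    return "\n\n".join(parts)


# Hypothetical sample data, not taken from the lecture.
example = format_example(
    instruction="Summarize the text in one sentence.",
    context="Agentic systems combine language models with tools and memory.",
    response="Agentic systems extend language models with tools and memory.",
)
print(example)
```

Leaving out `context` drops the Input section entirely, which matches the "optional additional context" note in the template.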

After Training – Capabilities: A well-trained LM (pre-trained + fine-tuned) can now generate relevant text given an instruction, leveraging the vast knowledge it absorbed. The lecture noted that such models have a lot of world knowledge and language understanding, enabling applications like Q&A, summarization, code generation, etc.

Applications of LMs: Before diving into agents, the talk highlighted examples of current LM-powered applications:

AI coding assistants (e.g. GitHub Copilot) that suggest code.

Domain-specific copilots (for law, medicine, etc.) that help in specialized tasks.

Chatbots and conversational interfaces (like ChatGPT) for general Q&A and support.

Insight: These applications show how LLMs can act as intelligent assistants in various domains. However, out-of-the-box LLMs still have constraints – they operate in a stateless, one-turn fashion, and can’t access new information or perform actions by themselves.

Accessing LMs (APIs vs Local): To use LMs in your projects, you typically call a cloud API or, for smaller models, run one locally. The lecture briefly mentioned deployment options:

Cloud providers (OpenAI, Anthropic, Cohere, etc. via APIs).

Local deployment of smaller open-source models, if needed for privacy or offline use.

Either way, the interaction pattern is similar: you send a text prompt to the model and get back a generated text completion.

Working with LLMs via APIs: The talk outlined basic steps to integrate an LM using an API:

Prepare your prompt (programmatically include user query and any context/instructions).

Make the API call to the model service.

Receive the model’s response text.

Post-process the response if needed, and possibly chain it into further prompts or tool calls.
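The four steps above can be sketched in a few lines of Python. To avoid tying the example to any one provider, the model call is a stand-in: `fake_model` is a hypothetical function that returns a canned string, and in a real project you would swap in your provider's client call.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a hosted or local model call."""
    return "  Paris is the capital of France.\n"


def answer(query: str, context: str, call_model: Callable[[str], str]) -> str:
    # Step 1: prepare the prompt from instructions, context, and the user query.
    prompt = (
        "Answer the question using the context.\n\n"
        f"Context: {context}\n\n"
        f"Question: {query}"
    )
    # Steps 2 and 3: call the model service and receive the raw response text.
    raw = call_model(prompt)
    # Step 4: post-process before displaying it or chaining it into another call.
    return raw.strip()


result = answer(
    "What is the capital of France?",
    "France's capital is Paris.",
    fake_model,
)
print(result)
```

Passing the model call in as a parameter keeps the prompt-building and post-processing logic testable without network access.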

This covers how language models function and are used at a high level. Next, the lecture moved to prompt engineering best practices – because how you ask the model can make a big difference in quality.

Prompt Engineering: Crafting Effective Prompts

Even with a fine-tuned model, you often need to give clear prompts to get good results. The lecturer stressed that how you prepare inputs is critical. Key strategies for prompting were given:

Write clear, descriptive instructions. Don’t assume the model knows exactly what you want – spell it out. Provide as much detail and specificity as possible about the task. The less the model has to guess, the more likely the answer will hit the mark.

Include relevant context. If the question depends on some background or data, include that in the prompt. You can provide static context (e.g. a reference passage) or dynamic context (like retrieved info – more on that later).

Give examples (Few-shot prompting). If feasible, show the model one or a few examples of the desired input-output behavior. This is known as few-shot prompting. For instance, before your actual query, you might show: “Q: [example question] → A: [ideal answer]”. This helps guide the model’s style and approach by demonstration.

Enable chain-of-thought (CoT) reasoning. This is a powerful prompting technique where you encourage the model to think step-by-step. For instance, you can prompt: “Let’s think step by step…” or instruct the model to first produce an outline or reasoning before the final answer. By giving the model “time to think,” you often get more coherent, correct solutions for complex problems.

Break down complex tasks. If the task is complicated, consider splitting it into smaller prompts or stages (this is sometimes called prompt chaining). For example, first ask the model to extract key info from a text, then in a second prompt ask it to analyze that info. Decomposing tasks can greatly improve performance.

Iterate and refine prompts. The lecture notes that prompt design is an iterative process. It’s useful to trace what prompts lead to good or bad outcomes and refine accordingly. Over time, you curate better prompts or even use automated prompt optimization.
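Several of these strategies (clear instructions, context, few-shot examples, and a chain-of-thought cue) can be combined in one small prompt builder. The sketch below is only one possible arrangement; `build_prompt` and its parameters are hypothetical names, and the exact wording that works best will depend on the model.

```python
def build_prompt(task, question, examples=(), context="", step_by_step=False):
    """Assemble a prompt from an instruction, optional context, and few-shot examples."""
    sections = [task]
    if context:
        sections.append(f"Context:\n{context}")
    # Few-shot prompting: show worked question/answer pairs before the real query.
    for example_question, example_answer in examples:
        sections.append(f"Q: {example_question}\nA: {example_answer}")
    # Chain-of-thought cue: invite the model to reason before answering.
    cue = " Let's think step by step." if step_by_step else ""
    sections.append(f"Q: {question}\nA:{cue}")
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Answer questions about spreadsheet formulas.",
    question="How do I add up a row of dollar amounts in Excel?",
    examples=[("How do I average cells A1 to A5?", "Use =AVERAGE(A1:A5).")],
    step_by_step=True,
)
print(prompt)
```

Keeping the builder as plain code also makes it easy to log which prompt variants led to good or bad outputs while iterating.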

Example – Better vs. Worse Prompts: The speaker illustrated how a vague prompt can produce subpar results, whereas a clarified prompt yields much better answers:

Worse: “How do I add numbers in Excel?” – Too ambiguous. The model might give a generic answer.

Better: “How do I add up a row of dollar amounts in Excel? I want to do this automatically for a whole sheet of rows, with all the totals in a ‘Total’ column.” – This includes specific context (“dollar amounts”, entire sheet, where to put totals), so the model can give a precise solution.

Similarly:

Worse: “Who’s the president?” (of which country? which year?)

Better: “Who was the president of Mexico in 2021, and how frequently are elections held?” – Specifies country and year, plus asks a related second fact, focusing the query.

The pattern is clear: be specific, provide details, and guide the model as much as possible. Good prompting is still an essential skill even as we move to more agentic uses of LMs.

However, even with great prompts, base LLMs have significant limitations. The lecture next highlighted the common shortcomings of LMs used alone, motivating the shift to agentic AI.

Limitations of Standard LLMs

LLMs are powerful, but if you’ve used them, you know they can mess up in predictable ways. The Stanford talk enumerated some key limitations of language models in standalone usage:

Hallucinations (Misinformation): LLMs sometimes produce confident-sounding but false information. They have no built-in truth gauge – if a fact wasn’t in training data or isn’t recalled correctly, the model might just improvise. This is dangerous for any application needing accuracy.

Knowledge Cut-off: Models only know about data up to their training cutoff date. They lack awareness of any events or facts after that. For example, an LM trained on data up to 2021 won’t know about anything that happened afterward. With a plain prompt, you can’t ask it for real-time information (stock prices, today’s weather, etc.) unless that information is supplied some other way.

Limited Context Window: There’s a limit to how much text you can feed into the model at once (a few thousand tokens for many models, more for newer ones). The model can’t remember unlimited conversation history or read arbitrarily large documents unless you manage that externally, so in long conversations it risks losing earlier details.

No Internet or Tools Access: By default, an LLM in isolation can’t perform external actions – it can’t browse the web, use a calculator, or call an API. It’s constrained to its training knowledge and textual output. This means it can’t look up fresh information or interact with other systems without help.

One-Shot Reasoning: In a single prompt-response, the model might not do complex reasoning reliably. It often answers in one go, which can lead to errors on multi-step problems (math, logical puzzles, code execution) because it doesn’t get a chance to check or revise intermediate steps in the basic usage pattern.

No Self-critique or Memory of Mistakes: If the model makes an error, it won’t know it by itself in a single-turn interaction. There’s no innate mechanism for it to reflect on or correct its answer after producing it.

These challenges set the stage for why we need agentic approaches. The lecture’s core was about overcoming these limitations by extending LLMs with new patterns and system designs.

Below, we’ll discuss several enhancements: giving LMs tools and knowledge (to address hallucinations and knowledge gaps), and enabling them to operate in iterative loops with planning and reflection (to handle complex tasks and reduce errors). Each is a step in the “progression of LM usage” that transforms a static LM into an agent.

Retrieval-Augmented Generation (RAG): Giving LMs Knowledge

One major technique covered is Retrieval-Augmented Generation (RAG) – essentially, connecting an LM to an external knowledge source. This addresses hallucinations and outdated knowledge by grounding the model’s input in relevant facts.

What is RAG? It’s a framework where, before the model produces an answer, the system retrieves documents or data relevant to the query and provides them to the model as additional context. For example, if the user asks a question about some niche topic or a recent event, the system can fetch an excerpt from Wikipedia or a knowledge base, and prepend it to the model’s prompt. The model then uses that text to generate a factual answer (ideally with less hallucination).

How it works: Typically, RAG involves a vector database or search index:

Convert the user’s query into an embedding (a numerical representation).

Use this vector to search a document corpus for passages that are semantically related (or use a traditional keyword search).

Retrieve the top relevant text snippets.

Construct a prompt that includes the retrieved texts (often with an instruction like “use the following information to answer…”).

The LM generates the answer, presumably using the provided snippets to ground its response.
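The retrieval steps above can be sketched with nothing but the standard library. This is a toy under stated assumptions: the "embedding" is a bag-of-words count vector rather than a learned embedding model, the three documents are made up, and `embed`, `retrieve`, and `build_rag_prompt` are hypothetical names. A production system would typically use an embedding model and a vector database instead.

```python
import math
import re
from collections import Counter

# Made-up document corpus for illustration.
DOCS = [
    "To reset the device, hold the power button for ten seconds.",
    "The warranty covers manufacturing defects for two years.",
    "Orders ship within three business days of payment.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(query_vector, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend the retrieved snippets to the question."""
    snippets = "\n".join(f"- {s}" for s in retrieve(query, docs, k))
    return f"Use the following information to answer.\n{snippets}\n\nQuestion: {query}"


prompt = build_rag_prompt("How do I reset my device?", DOCS)
print(prompt)
```

The resulting prompt would then be sent to the LM, which is the final step in the list above.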

Benefit – Factual grounding: By giving the LM real reference text, you reduce hallucinations and improve accuracy. The model is no longer relying purely on its trained memory; it has authoritative information at hand. Insop Song stressed that external knowledge isn’t just a neat add-on, but a “lifeline” for LMs to stay factual.

Current Examples: Many QA systems and chatbots use RAG. For instance, Bing Chat and Bard will fetch live web results to answer questions about current events. Enterprise chatbots use company documents as a retrieval corpus so the answers are grounded in up-to-date internal data.

Limitation: RAG requires a curated knowledge source and the retrieval step has to work well. If nothing relevant is retrieved, or if the snippet is misleading, the model’s answer may still be wrong. It also adds complexity (maintaining a document index). But overall, RAG greatly expands an agent’s knowledge beyond its frozen training data.

The Stanford lecture included RAG as a key method for agentic systems, since an agent should be able to “consult resources” like a human researcher would. By integrating search or database lookup tools, we enable our AI agent to stay informed and correct.

Tool Use and APIs: Letting LMs Act

Beyond just fetching knowledge, agentic AI involves letting the model execute tools or functions. The talk highlighted tool use as a cornerstone: LMs can be augmented with the ability to call external APIs, run code, or perform actions based on their output.

Why tool use? Some tasks are impossible or unreliable for an LM to do in its head, e.g. complex calculations, interacting with a website, or controlling a robot. By giving the model a way to invoke tools, we convert it from a passive text generator to an active problem-solver. As one summary put it, “tool usage turns an AI from a static predictor into a dynamic doer” – it can now fetch real-time info, trigger actions, or use specialist functions (like a math solver).

How do LMs use tools? Typically via an API-calling format. There are a few patterns:

Special formatting in output: The model is trained or prompted to output a special syntax when it wants to use a tool. For example, the ReAct framework and others have the model produce an “action” like `<tool_name>[parameters]`, which the system intercepts, executes, and then returns the result to the model. The model then continues, now with the tool result in context.

Function calling interface: Newer API features (e.g. OpenAI function calling) allow you to define functions (like `search(query)` or `calculate(expression)`). The model can then output a JSON calling one of these functions. The system executes it and returns the result, which the model uses to finalize its answer.

Essentially, the LM and the system interact in a loop:

Model decides it needs a tool and outputs an action.

System executes the action and gives the outcome back.

Model incorporates the outcome into its reasoning or answer.
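This model-system loop can be sketched as a small dispatcher. Everything here is an assumption for illustration: `scripted_model` is a hard-coded stand-in for an LM, `search` returns a made-up result instead of calling a real search API, and the JSON shape is just one plausible convention rather than any specific provider's format.

```python
import json


def search(query: str) -> str:
    """Hypothetical tool: a real system would call a search API here."""
    return f"Top result for '{query}': Cafe Aurora, 4.8 stars"  # made-up data


# Allowlist of tools the agent may call.
TOOLS = {"web-search": search}


def scripted_model(messages: list[dict]) -> str:
    """Stand-in for an LM: asks for a tool first, then answers with the result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return json.dumps({"tool": "web-search", "query": "cafe reviews"})
    return json.dumps({"answer": f"Based on reviews: {tool_results[-1]['content']}"})


def run_agent(user_message: str, model, max_turns: int = 5) -> str:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        reply = json.loads(model(messages))      # 1. model decides what to do
        if "answer" in reply:
            return reply["answer"]               # 3. model used the outcome
        tool = TOOLS.get(reply["tool"])          # 2. system executes the action
        if tool is None:
            result = f"error: unknown tool {reply['tool']}"
        else:
            result = tool(reply["query"])
        messages.append({"role": "tool", "content": result})
    return "Stopped: turn limit reached."


final = run_agent("What's the best cafe based on user reviews?", scripted_model)
print(final)
```

The `max_turns` cap and the tool allowlist are simple guards so that a confused model cannot loop forever or call something it was never given.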

Examples from the lecture:

Web search: User asks, “What’s the best cafe based on user reviews?” The LM outputs a tool call: `{tool: "web-search", query: "cafe reviews"}`. The system does a web search, returns results (perhaps a summary of top reviews). The model then uses those results to answer with a recommendation.

Math calculation: User asks, “If I invest $100 at 7% compound interest for 12 years, what will I have at the end?” This requires actual calculation. The LM outputs something like `{tool: "python", code: "..."}` – for instance, code to compute the compound interest. The system runs the code (e.g. in a sandboxed interpreter) and returns the numeric result, which the model can then put into a nice sentence answer.

These illustrate that with tools, the agent can reach beyond its own limitations – whether it’s browsing for data or doing precise math, it’s no longer stuck with just what it memorized or approximated.
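For the compound interest question, the code the model hands to the Python tool might look like the sketch below. It assumes interest is compounded once per year, so the balance is principal × (1 + rate)^years; the function name `compound` is hypothetical, and the lecture's actual generated code is not reproduced here.

```python
def compound(principal: float, rate: float, years: int) -> float:
    """Future value with interest compounded once per year (an assumption)."""
    return principal * (1 + rate) ** years


balance = compound(100, 0.07, 12)
print(f"${balance:.2f}")  # prints $225.22
```

The model would then wrap the number in a sentence, for example that $100 grows to about $225.22 after 12 years.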

Safety and Constraints: The lecture likely noted that tool use should be carefully managed. You typically restrict what tools the agent can access (for example, only a calculator or a company database, not arbitrary shell commands) to prevent unwanted actions. Nonetheless, this ability to act is powerful.

In summary, tool integration is a defining feature of agentic AI. An agent can not only think but also act on the world (in limited ways we permit). This dramatically widens the range of tasks: an AI agent could, for instance, read and answer emails, execute trades (with oversight), or control IoT devices – given the appropriate tool APIs. As Insop Song emphasized, LMs with tools become dynamic, able to retrieve real data or perform operations rather than guessing.

Having covered knowledge retrieval and tools, we move to the next layer: enabling the model to reason and operate in multi-step workflows rather than single prompt-response. This is where planning and iterative reasoning come in.

Agentic Thinking: Planning and Iterative Reasoning

A key part of the lecture was the concept of allowing the model to handle multi-step tasks through planning and reflection. Instead of asking the model a question and accepting the first answer, agentic AI has the model engage in a process: plan what to do, try steps, reflect on results, and possibly revise. Two major patterns here are Planning and Reflection.

Planning for Multi-Step Tasks

Humans break down complex problems into sub-tasks; agentic AI can do the same. Planning means the agent formulates a strategy or sequence of steps to reach a goal.

What planning looks like: In practice, the model might first output a plan (a list of steps) before executing them. For example, if asked to write an essay, an agentic approach might be:

Plan: “Step 1: Outline key points. Step 2: For each point, gather details... Step 3: Draft paragraphs. Step 4: Proofread.”

Then the agent would follow these steps one by one (possibly using tools or calling the LM for each part).

In code, you might prompt the model: “List the steps to solve this problem before proceeding.”

Reliability through planning: As Insop Song noted, a model that breaks a task into steps is far more reliable than one that “guesses” in one go. Planning helps ensure it doesn’t skip important parts and can handle complexity piecewise. For example, when debugging code, an agent that plans “read error -> identify cause -> propose fix -> apply fix” will likely perform better than one that tries to fix code in one jump.

Hierarchical planning: The talk didn’t go deep into this, but an agent could even do high-level and low-level planning (like a hierarchical planner). High-level plan: what subtasks to do; then for each subtask, generate a detailed plan or directly solve it. This mimics how we solve big projects by breaking them down.

Coordination with tools: Planning can incorporate deciding which tool to use when. E.g., an agent might plan: “Step 1: use search tool for latest data; Step 2: analyze data with LLM; Step 3: draft answer; Step 4: use calculator tool to verify numbers; Step 5: finalize answer.”

The lecture positioned planning as a core design pattern: “Break tasks into steps.” This makes the agent more systematic and less prone to errors of omission.
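A plan-then-execute flow can be sketched as follows. The planner and executor are stand-ins: `plan_model` returns a fixed numbered list where a real agent would prompt an LM with something like “List the steps to solve this problem before proceeding,” and `execute` is a hypothetical placeholder for per-step LM or tool calls.

```python
def plan_model(goal: str) -> str:
    """Stand-in for an LM asked to list the steps for a goal."""
    return "1. Outline key points\n2. Gather details\n3. Draft paragraphs\n4. Proofread"


def parse_plan(text: str) -> list[str]:
    """Extract steps from a numbered list such as '1. Do something'."""
    steps = []
    for line in text.splitlines():
        number, _, step = line.partition(". ")
        if number.strip().isdigit() and step:
            steps.append(step.strip())
    return steps


def execute(step: str) -> str:
    """Hypothetical executor; a real agent would call the LM or a tool here."""
    return f"done: {step}"


steps = parse_plan(plan_model("Write an essay"))
results = [execute(step) for step in steps]
for result in results:
    print(result)
```

Parsing the plan into an explicit list is what lets the system run, log, and retry each step separately instead of trusting one long free-form answer.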

Reflection and Self-Improvement

Even with planning and tools, an agent might produce a flawed solution on the first attempt. Reflection is the pattern where the agent examines its own output or intermediate work, and then improves it.

How reflection works: After the model gives an answer or a draft, you prompt it to critique and refine that answer. For instance:

Initial attempt: The agent answers a question or produces some output.

Reflect prompt: “Critique the above output. Where might it be incorrect or improved?”

The model then identifies potential errors or improvements in its answer.

Refinement: You then prompt, “Given that feedback, please revise your answer.” The model generates a corrected answer.

This can be done in a loop (hence terms like iterative refinement or self-healing prompts).

Example from lecture (code refinement): Suppose the task is to improve a piece of code. A reflection approach would be:

Show the code to the model and ask for a constructive critique or list of issues.

Then feed the code plus the model’s own feedback back, and ask it to rewrite the code addressing the feedback.

This could repeat for multiple iterations: each time, the model reviews its last output, catches more issues, and refines further.

This approach can converge on a much better solution than a single-shot attempt. Essentially, the model is using itself as a check.
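The critique-and-rewrite loop can be sketched like this. Both `critique` and `revise` are hard-coded stand-ins for LM calls (two simple string checks and matching fixes), so the example shows the control flow of reflection rather than real model behavior.

```python
def critique(code: str) -> list[str]:
    """Stand-in for an LM critique prompt; here, two hard-coded checks."""
    issues = []
    if "== None" in code:
        issues.append("use 'is None' instead of '== None'")
    if '"""' not in code:
        issues.append("add a docstring")
    return issues


def revise(code: str, issues: list[str]) -> str:
    """Stand-in for an LM rewrite prompt; addresses the first issue per pass."""
    if "is None" in issues[0]:
        return code.replace("== None", "is None")
    header, _, body = code.partition("\n")
    return f'{header}\n    """Return True if x is missing."""\n{body}'


def reflect(code: str, max_rounds: int = 3) -> str:
    """Alternate critique and revision until no issues remain or rounds run out."""
    for _ in range(max_rounds):
        issues = critique(code)
        if not issues:
            break
        code = revise(code, issues)
    return code


draft = "def missing(x):\n    return x == None"
final = reflect(draft)
print(final)
```

The `max_rounds` limit matters in practice: a model critic can keep finding nitpicks indefinitely, so the loop needs a stopping rule beyond “no issues found.”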

Why it helps: Reflection acts like a simulated reviewer or test. It reduces errors because the model gets a chance to correct itself. The talk noted that iterative reflection builds user trust, since the final result is more polished. It’s akin to an author proofreading and editing a draft multiple times.

Quick to implement: Interestingly, reflection doesn’t necessarily require new training; it can often be done with clever prompting (though specialized techniques exist). The lecture described reflection as a “pattern that’s quick to implement and leads to good performance”. Simply adding a “let’s double-check the answer” step can catch mistakes.

Combining planning and reflection yields a powerful loop: the agent plans a solution path, executes steps (using tools as needed), then checks its work and iterates. This is essentially how humans tackle complex tasks! It addresses the limitation of one-shot reasoning by introducing a feedback cycle.

Multi-Agent Collaboration

While not always necessary, the lecture also mentioned multi-agent collaboration as an advanced pattern. This means using multiple AI agents (or multiple specialized LMs) that can split work and converse with each other to solve a problem.

Why multiple agents? Sometimes dividing roles can help. For example, you could have a “Planner” agent and an “Executor” agent: one generates a plan, the other follows it. Or have agents with different expertise (one is good at math, another at writing) work together on a task.

Smart home example: The webinar gave a scenario of designing a smart home with multiple specialized agents:

Climate Control Agent – adjusts temperature/humidity.

Lighting Agent – controls lights.

Security Agent – monitors cameras and alarms.

Energy Agent – optimizes energy usage.

Entertainment Agent – manages TVs, music, etc.

Orchestration Agent – coordinates all others to work in harmony.

This is a conceptual example where each sub-agent handles a domain, and a top-agent coordinates. It shows how complex systems might be managed by a team of agents rather than a single monolith.
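The orchestrator idea can be sketched with a few of the sub-agents from the list. This is a deliberately simplified assumption: routing is done by keyword matching, whereas a real orchestration agent would more likely ask an LM to decide which sub-agents to involve, and each sub-agent here only echoes the request instead of controlling a device.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    keywords: tuple[str, ...]  # hypothetical routing hints for this sketch

    def handle(self, request: str) -> str:
        """Placeholder for the sub-agent's real work."""
        return f"{self.name} handling: {request}"


AGENTS = [
    Agent("Climate Control Agent", ("temperature", "humidity")),
    Agent("Lighting Agent", ("light", "lamp")),
    Agent("Security Agent", ("camera", "alarm")),
]


def orchestrate(request: str) -> list[str]:
    """Orchestration agent: forward the request to every matching sub-agent."""
    text = request.lower()
    matched = [a for a in AGENTS if any(k in text for k in a.keywords)]
    if not matched:
        return ["No agent available; ask the user to clarify."]
    return [agent.handle(request) for agent in matched]


responses = orchestrate("Dim the lights and lower the temperature")
for response in responses:
    print(response)
```

A single request can fan out to several sub-agents, which is the coordination role the Orchestration Agent plays in the example.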

Collaboration via communication: Multi-agent systems often communicate in natural language. One agent can output a message that another agent reads as input. They can debate or critique each other’s ideas. This echoes the idea of “two heads are better than one” – agents can catch each other’s mistakes or inspire better solutions.

Use cases: Multi-agent setups are being explored for things like code generation (e.g. one agent writes code, another tests it), research assistance (brainstorming between “analyst” and “critic” agents), and any scenario where dividing a complex job into roles is intuitive.

The Stanford lecture treated multi-agent collaboration as one of the core design patterns for agentic AI. However, it’s worth noting this can add overhead and complexity – sometimes a well-designed single agent with tools is enough. But in principle, collaboration can enhance robustness and parallelism.

The Agentic Loop

Bringing it all together, an agentic AI system might do the following for a given task:

Planning Phase: Decide a plan of action (possibly using a special “planning prompt” or a dedicated planning agent).

Execution Phase: Carry out the steps. This could involve multiple prompt-tool cycles:

Use retrieval to get needed info (RAG).

Use tools/APIs to get external results (calculations, etc.).

Use the LM itself for reasoning or intermediate subtask results.

Reflection Phase: At some checkpoints or at the end, review the output:

If something seems off, revise the plan or fix the output (go back to execution step).

Possibly have a second agent review the first agent’s work for quality.

Finalization: Present the final answer or action once it has been checked or refined.

This loop can repeat until a certain criterion is met (answer is good or time is up). Importantly, agentic systems often maintain a memory of the dialogue or previous steps. They carry forward the context so that with each iteration, they “remember” what’s been done and learned. This addresses the limitation of the short context window by explicitly managing state (e.g., keeping a summary of important facts or using a long-term memory store).
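The phases above, together with an explicit memory, can be sketched as one small loop. All three phase functions are hypothetical stand-ins with scripted behavior: the first draft is deliberately weak so that the reflection check has something to catch, and the "retrieved" fact is made up.

```python
def plan(goal: str) -> list[str]:
    """Stand-in for a planning prompt; returns a fixed two-step plan."""
    return ["retrieve facts", "draft answer"]


def execute(step: str, memory: dict) -> None:
    """Stand-in for the execution phase; writes results into shared memory."""
    if step == "retrieve facts":
        memory["facts"] = "The store opens at 9am."  # made-up retrieved snippet
    elif step == "draft answer":
        memory["attempts"] = memory.get("attempts", 0) + 1
        if memory["attempts"] == 1:
            memory["draft"] = "I am not sure."  # deliberately weak first draft
        else:
            memory["draft"] = f"According to our records: {memory['facts']}"
    memory["log"].append(step)


def reflect(memory: dict) -> bool:
    """Stand-in for a reflection prompt: is the draft grounded in the facts?"""
    return memory["facts"] in memory["draft"]


def run_agent(goal: str, max_revisions: int = 3) -> dict:
    memory = {"goal": goal, "log": []}   # state carried across iterations
    for step in plan(goal):              # planning and execution phases
        execute(step, memory)
    for _ in range(max_revisions):       # reflection phase with a stopping rule
        if reflect(memory):
            break
        execute("draft answer", memory)
    return memory                        # finalization: read memory["draft"]


state = run_agent("When does the store open?")
print(state["draft"])
print(state["log"])
```

The `memory` dictionary is the simplest possible version of the state management described above; a longer-running agent might summarize it or move it to an external store.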

The result is an AI that approaches how a human might solve problems: gather info, use tools, break down the task, execute step by step, double-check the work, and possibly collaborate with others. It’s no surprise the lecture’s message was that agentic AI is the next big paradigm, bridging the gap between what AI can theoretically do and how it can be applied in the real world.

We’ll now see a concrete example given in the lecture, which ties many of these pieces together.

Example: Building a Customer Support AI Agent

As a practical illustration, the lecture walked through a customer support AI agent example (as mentioned in the video and summaries). This example demonstrates how the concepts – retrieval, tools, planning, etc. – come together in a realistic scenario.

Scenario: Imagine an AI agent that can handle customer support queries for a company’s products. It should answer questions, resolve issues, and know when to hand off to a human.

Key components an agent like this would need:

Knowledge Base Access (RAG): It should retrieve relevant FAQs, manuals, or past ticket resolutions from the company’s knowledge base to get accurate information. For instance, if a customer asks “How do I reset my device?”, the agent searches the help docs for “reset device” steps and uses that text in its reply.

Customer Data Tools: It might use APIs to pull the customer’s account or order info. E.g., Tool: `getOrderStatus(customer_id)` if someone asks about an order, or `lookupWarranty(product_serial)` if it needs to check coverage. This ensures responses are personalized and correct for that specific user.

Interactive Planning: The agent may have to gather information from the user in a logical sequence (like a human agent would). It plans a dialog flow: greet -> ask for account ID -> ask for issue details -> provide solution or troubleshoot step-by-step. This involves planning the conversation and remembering the user’s answers (context carrying) across turns.

Reflection & Handoff: The agent should recognize if it’s unable to solve the issue (perhaps through a reflection step analyzing its own answers). If it’s stuck or the user is unhappy, the plan might include: “Step X: escalate to human support”. This is an important safety/ethical feature: the agent knows its limits.

How the agent operates: Suppose a user says, “My gadget isn’t turning on, what should I do?” An agentic support bot might do the following:

Retrieve Knowledge: Find any “device won’t turn on” troubleshooting guides (via RAG it finds a relevant support article).

Plan Dialogue: Decide to ask a clarifying question – maybe “Can I know which model you have?” because the steps might differ by model.

Check Device: After the user responds with the model, the agent may use a tool to check whether that device is registered or under warranty.

Troubleshooting: Agent uses the knowledge base info to guide the user: “Okay, let’s try a few steps: First, hold the power button for 10 seconds… Did it light up?” It might go step by step, adjusting based on the user’s replies (this adaptability is a form of reactive planning).

Reflection: After providing a solution, agent may double-check if it covered all common fixes (“Did I address all possible causes?”). If not solved, it might loop through another set of steps or say “I’m going to transfer you to a specialist.”

Outcome: Either the issue is resolved with agent guidance, or it hands over to a human agent with a summary of what was tried.

This example from the lecture encapsulates why agentic AI is useful: a support chatbot that simply answered from a knowledge base might give a generic response, but an agentic support bot can interact, ask questions, use company data, and take actions (like creating a support ticket or scheduling a repair via API). It’s more autonomous and helpful, functioning almost like a real support rep.
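The troubleshooting-then-handoff flow can be sketched as below. This is a toy under several assumptions: the knowledge base is a small dictionary instead of a retrieval system, the user's replies are passed in as a list of booleans, and `lookupWarranty` (the tool name used earlier) is stubbed with a made-up rule. The second troubleshooting step is also invented for the example.

```python
KNOWLEDGE_BASE = {
    "won't turn on": [
        "Hold the power button for 10 seconds.",
        "Charge the device for 30 minutes, then try again.",  # made-up step
    ],
}


def lookupWarranty(product_serial: str) -> bool:
    """Stub for the warranty tool; the rule below is made up for the example."""
    return product_serial.startswith("A")


def support_session(issue: str, serial: str, user_says_fixed: list[bool]) -> list[str]:
    """Walk through KB steps; escalate with a summary if none of them helps."""
    transcript = []
    tried = 0
    for step, fixed in zip(KNOWLEDGE_BASE.get(issue, []), user_says_fixed):
        transcript.append(f"Agent: {step} Did that help?")
        tried += 1
        if fixed:
            transcript.append("Agent: Glad that worked!")
            return transcript
    # Handoff: the agent recognizes its limits and passes context to a human.
    coverage = "under warranty" if lookupWarranty(serial) else "out of warranty"
    transcript.append(
        f"Agent: Transferring you to a specialist (tried {tried} steps, device {coverage})."
    )
    return transcript


resolved = support_session("won't turn on", "A123", [False, True])
escalated = support_session("won't turn on", "B999", [False, False])
print("\n".join(escalated))
```

Including what was tried and the warranty status in the handoff message mirrors the "summary of what was tried" that a human agent would need.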

Real-world deployment of such an agent would need careful testing (you don’t want it to, say, order a replacement device incorrectly due to a hallucinated tool use!). The lecture likely pointed out design patterns that ensure reliability, which leads us to the next section.

Key Design Patterns for Agentic AI Systems

Toward the end, the lecture summarized several design patterns – basically best practices or common architectures – for building agentic AI. These patterns encapsulate what we’ve discussed:

Reflection Pattern: Allow the model to iteratively critique and improve its outputs. Useful for tasks where accuracy is crucial (code, math, etc.) or where an initial draft can be refined (writing tasks). Advantage: reduces errors and instills more confidence in the result by catching mistakes through self-review.

Tool Use Pattern: Integrate external tools and function calls into the workflow. Essential whenever the task goes beyond text generation: accessing databases, performing calculations, fetching real-time info, etc. Advantage: extends the capabilities of the agent (it can work around the knowledge cut-off, and data grounding leads to far fewer hallucinations).

Planning Pattern: Have the agent explicitly plan its steps or reasoning path. Especially helpful for complex, multi-part problems. Advantage: yields more structured and reliable problem solving. It prevents the agent from rushing to an answer and improves handling of long tasks by focusing on one sub-task at a time.

Multi-Agent (Collaboration) Pattern: Use multiple agents for different roles or have them converse to solve a problem. This could be as simple as two agents (e.g., a “Solver” and a “Checker”) or a whole team as in the smart home example. Advantage: can improve robustness (agents double-check each other) and allow specialization. One agent can compensate for another’s weaknesses, similar to how a team of experts might work together.

The table below (based on the lecture content) summarizes these design patterns and what they bring to the table:

These patterns are not mutually exclusive – they are often combined in a single agent system. For instance, an agent might plan its approach, use tools during execution, reflect on the result, and even spin off a helper agent if needed.
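To make the combination concrete, here is a minimal sketch of planning, tool use, and reflection in one loop. Everything in it is an assumption for illustration: `plan` is a hard-coded stand-in for an LLM planner, `TOOLS` is a hypothetical tool registry, and the reflection step is a simple range check rather than a model critique.

```python
# Hypothetical tool registry: each tool takes the running value and one operand.
TOOLS = {
    "add": lambda value, operand: value + operand,
    "multiply": lambda value, operand: value * operand,
}


def plan(task):
    """Planning: a real agent would ask an LLM; here the plan is hard-coded.

    Demo task: total for some items at 4.5 each plus 6.0 shipping.
    """
    return [("multiply", 4.5), ("add", 6.0)]


def execute(start, steps):
    """Tool use: run each planned step through the matching tool."""
    value = start
    for tool_name, operand in steps:
        value = TOOLS[tool_name](value, operand)
    return value


def reflect(result, lower=0.0, upper=1000.0):
    """Reflection: a cheap sanity check standing in for an LLM self-critique."""
    return lower <= result <= upper


def run_agent(task, start, max_attempts=2):
    for _ in range(max_attempts):
        result = execute(start, plan(task))
        if reflect(result):
            return result
    # The plan here is deterministic, so a retry would only help with a real model.
    raise RuntimeError("result failed reflection; needs human review")
```

For example, `run_agent("order total", 12.0)` multiplies 12.0 by 4.5, adds 6.0, and returns 60.0 once the reflection check passes.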

The Stanford lecture underscored that by embracing these patterns, we can push the boundaries of what AI can achieve. They are essentially the design principles for anyone looking to build advanced AI agents in practice.

Applications and Impact of Agentic AI

Throughout the talk, various applications of agentic AI were mentioned, painting a picture of how this approach can transform industries:

Software Development: Agentic AI can act as a coding assistant that not only suggests code, but writes, tests, and debugs it autonomously. For example, an agent could generate code, run it in a sandbox, notice (via a unit test tool) that it failed, then fix the code – all in a loop. This could shorten development cycles significantly, “by weeks” as one quote highlighted. Early versions of this are seen in tools like GitHub Copilot Labs (code explanation and transformation) and experimental AutoGPT coding agents.

Research and Analysis: Instead of a human analyst gathering information, an AI agent can retrieve the latest data (using web or database tools), aggregate it, and even write reports. For instance, a financial analysis agent might pull recent stock prices and news headlines, perform calculations, then output a summary report. Because it can use tools, it stays updated; because it can plan, it can make sure every required analysis point is covered.

Customer Service & Operations: As we saw with the support bot example, agentic AI can handle many routine customer inquiries with minimal supervision. Beyond customer support, think of agents scheduling meetings (they’d check calendars, book slots), managing emails, or even coordinating workflows across departments by acting as an autonomous assistant.

Healthcare and Finance (cautiously): These critical fields require accuracy and compliance, but agentic AI could assist professionals. E.g., a medical agent might gather patient history, draft clinical notes, or cross-check guidelines – always with a human final say. A finance agent might monitor transactions and flag anomalies, or automatically fill out forms. Because agentic AI can learn from interactions and adapt, it might reduce human workload in these precision-driven domains. (Of course, ethical and regulatory hurdles mean AI wouldn’t be fully autonomous here soon, but as a copilot it’s valuable.)

Personal Assistants: On the user end, an agentic AI could effectively become a true digital assistant – not just answering questions, but performing tasks for you. “Book my flight next Tuesday” could prompt the agent to use a flight search API, find options, and actually make a reservation on your behalf (after confirming with you). Some early signs of this are AI integration in smart home automation, as hinted by the multi-agent home example.
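The generate, test, and fix loop described under Software Development can be sketched as follows. This is a toy under stated assumptions: `generate_code` is a hypothetical stand-in for an LLM call that simply returns canned drafts, and `exec` in a fresh namespace is not a real sandbox (a production agent would run candidates in an isolated process or container).

```python
# Canned drafts standing in for successive model outputs.
CANDIDATES = [
    "def add(a, b):\n    return a - b\n",  # first draft, contains a bug
    "def add(a, b):\n    return a + b\n",  # revised draft
]


def generate_code(attempt, feedback):
    """Stand-in for an LLM call; a real agent would put feedback into the prompt."""
    return CANDIDATES[min(attempt, len(CANDIDATES) - 1)]


def run_tests(source):
    """Run one unit test against the candidate; return an error message or None."""
    namespace = {}
    try:
        exec(source, namespace)  # NOT a sandbox; isolate this in real systems
        result = namespace["add"](2, 3)
        if result != 5:
            return f"add(2, 3) returned {result}, expected 5"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def code_fix_loop(max_attempts=3):
    """Generate, test, and regenerate until the test passes or attempts run out."""
    feedback = None
    for attempt in range(max_attempts):
        source = generate_code(attempt, feedback)
        feedback = run_tests(source)
        if feedback is None:
            return source, attempt + 1
    return None, max_attempts
```

With the canned drafts above, the first attempt fails its test, the failure message becomes the feedback, and the second attempt passes.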

The lecture stressed that this isn’t just theoretical – it’s already in motion. Companies are rapidly adding agentic features: Microsoft’s Copilot vision, for example, where future MS Office might have an agent that can take a goal (“summarize these sales and draft an email to the team”) and execute multi-step actions to achieve it.

Adopting agentic AI can bring scalability and adaptability. Unlike traditional software that needs every scenario hard-coded, an AI agent can learn from each interaction and get better (in some cases retraining or simply accumulating knowledge). This reduces the need for constant human oversight on repetitive tasks and allows handling a wide variety of requests. As Insop Song put it, agentic models “adapt, iterate, and scale — just like human teams”, which could be the future of many enterprise workflows.

Before concluding, the talk also touched on some important considerations when deploying agentic AI. We turn to those next.

Ethical and Practical Considerations

Empowering AI with more autonomy and capability also raises ethical and safety considerations. The lecture made sure to address these (especially during Q&A):

Accuracy and Hallucination Risks: Even with RAG and tools, AI agents can make mistakes. In critical applications (health, finance, legal advice), an unchecked AI could do real harm by acting on false information. Mitigation: always have a human in the loop for high-stakes decisions. Use reflection and evaluation tests liberally. For example, require human approval for certain agent actions (like transferring money or prescribing something).

Tool Misuse and Safety: Giving an agent tool access is powerful but can be dangerous if not sandboxed. Imagine an agent with unrestricted system access – it could delete files or leak data if it malfunctions or is prompted maliciously. The advice is to whitelist safe tools and put limits (e.g., if an agent is allowed to execute code, run it in a secure sandbox with resource limits). Also audit the outputs: ensure the agent’s tool requests are reasonable (no agent should be executing rm -rf *!). This is an active area of research (making sure agents follow policies and don’t go rogue).

Bias and Fairness: LLMs can inherit biases from training data. An autonomous agent might inadvertently produce discriminatory outputs or decisions if not carefully checked. It’s crucial to test agents for biased behavior and use techniques like RLHF to align them with human values and fairness criteria. In customer support, for example, the agent must treat all users fairly and respectfully – requiring robust training and maybe rule-based overrides for inappropriate content.

Privacy: Agents that use internal data or browse the web must handle sensitive information carefully. For instance, if an agent retrieves customer data, we need to ensure it doesn’t inadvertently expose it. Companies deploying such agents should enforce data privacy guidelines (the agent should not divulge private info to unauthorized queries, etc.). This might mean building in prompt filters or metadata tags that classify what can be revealed.

Transparency: When an AI agent is engaging with people, it’s often recommended to reveal that it’s AI (not a human), and possibly to log its reasoning or sources. Some support chatbot implementations, for instance, let the agent cite the knowledge base articles it used. This transparency helps build trust and allows users to verify information sources. It also aids debugging – if an agent made an odd decision, logs of its chain-of-thought (if available) can help developers understand why and fix it.

Human Oversight and Intervention: The lecture likely emphasized that agentic AI is not about removing humans entirely, but augmenting them. You should design systems where a human can take over if the agent gets stuck or asks for help. For example, the agent might have a threshold: if it has looped on reflection 3 times and is still not confident, it flags a human. This way, you get the efficiency of AI most of the time with a safety net for the tricky cases.

Evaluation Challenges: A practical note is that evaluating how well an AI agent performs is harder than evaluating a single-turn model. It involves judging the sequence of actions, success at the final goal, etc. The speaker noted that as agents become more complex, our evaluation strategies must also evolve. We might need new benchmarks that capture how effectively an agent uses tools or how safely it operates over long sessions (e.g., does it eventually go off track or not).
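Several of the safeguards above (an allow-list of tools, human approval for high-stakes actions, and an audit trail) can be combined in one small gate that every tool request passes through. This is a minimal sketch, not a security boundary: the tool names, `guard_tool_call`, and the `approve` callback are all hypothetical.

```python
ALLOWED_TOOLS = {"search_kb", "create_ticket", "transfer_money"}  # allow-list
NEEDS_APPROVAL = {"transfer_money"}  # high-stakes actions need a human sign-off
AUDIT_LOG = []  # every request is recorded, whether allowed or denied


class ToolRequestDenied(Exception):
    """Raised when a tool request is blocked by policy."""


def guard_tool_call(tool, args, approve=None):
    """Check a tool request against policy before anything is executed.

    approve is a hypothetical callback representing the human in the loop;
    it receives (tool, args) and returns True to allow the action.
    """
    entry = {"tool": tool, "args": args, "status": "denied"}
    AUDIT_LOG.append(entry)
    if tool not in ALLOWED_TOOLS:
        raise ToolRequestDenied(f"tool not on allow-list: {tool}")
    if tool in NEEDS_APPROVAL and (approve is None or not approve(tool, args)):
        raise ToolRequestDenied(f"human approval required for: {tool}")
    entry["status"] = "approved"
    return entry
```

A request such as `guard_tool_call("shell", {"cmd": "rm -rf *"})` is rejected because `shell` is not on the allow-list, and `transfer_money` only goes through when the `approve` callback returns True. Denied requests still land in `AUDIT_LOG`, which is what makes later review possible.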

In short, with great power (an autonomous agent) comes great responsibility to ensure it’s used correctly. The Stanford lecture made it clear that while agentic AI opens exciting frontiers, developers must be mindful of safety, ethics, and reliability at every step. Techniques like Constitutional AI or other alignment methods may have been mentioned as ways to build in ethical principles.

Getting Started and Next Steps

For engineers and researchers excited by agentic AI, the lecture offered advice on how to begin building such systems:

Start by using existing LLM APIs with some of the patterns above in a small project. For instance, try making a simple QA bot that uses RAG (there are open-source templates and LangChain libraries to help). This gives hands-on experience with prompt chaining and tool integration.

Experiment with open-source agent frameworks. The community has produced many (LangChain, AutoGPT, etc.), but understanding the underlying prompts and logic is more important than any specific library. Use them to see examples of reflection or planning in action, then tweak for your needs.

Learn from resources: The speaker pointed to Stanford’s own online AI programs and recommended staying updated via research papers or courses. Agentic AI is evolving fast (many developments throughout 2024–2025), so reading papers like ReAct or new benchmarks (e.g. for tool-using agents) is useful. Engaging with communities (on forums or workshops) can also help exchange ideas.

Crucially, the talk encourages a practical mindset: try building something and iteratively improve it. Because agentic systems can be complex, you learn a lot by doing – for example, you might build a prototype that plans and uses a calculator, then realize it needs a better reflection step, etc. Each iteration improves your understanding.
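As a starting point, the "plans and uses a calculator" prototype mentioned above might look something like this. It is a sketch under assumptions: `plan` is a hard-coded stand-in for an LLM planner, the `{prev}` placeholder convention is invented for this example, and the calculator only handles basic arithmetic.

```python
import ast
import operator

# Arithmetic operators the calculator tool accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression):
    """Tool: evaluate + - * / arithmetic by walking the AST, without eval()."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


def plan(question):
    """Stand-in planner: a real prototype would ask an LLM for these steps.

    "{prev}" is a placeholder for the previous step's result.
    """
    return ["(120 + 80) * 3", "{prev} / 4"]


def run(question):
    """Execute the plan step by step, feeding each result into the next step."""
    prev = None
    for step in plan(question):
        prev = calculator(step.format(prev=prev))
    return prev
```

Running `run("any question")` evaluates the first step to 600 and the second to 150.0. A natural next iteration, in the spirit of the advice above, is to add a reflection step that checks the final number before returning it.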

Final Thoughts

In a final note, Insop Song remarked that agentic AI is perhaps the “missing link” between AI’s enormous potential and its real-world impact. By bridging that gap, those who harness agentic AI effectively will be at the forefront of the next wave of AI-driven innovation.

Key takeaway: AI agents are here, and they are set to redefine how we interact with technology – moving from simple chatbots to autonomous collaborators. The Stanford lecture provides both the conceptual roadmap and practical insights to start building with agentic AI today. 🚀
