GenAI-Powered News Curation: V2

Cut Through the AI Noise: 🤖 GenAI-Powered News Curation on Fireworks AI 🎆

The daily barrage of AI news is less a stream and more a torrent. Research breakthroughs, startup announcements, ethical debates: it's a constant influx. 😖

For those working in or simply fascinated by Artificial Intelligence, the challenge isn't finding information; it's discerning what truly matters amidst the digital avalanche. How do you filter out the noise and amplify the signal from genuinely significant AI developments, efficiently and reliably?

Why I Built This Tool

Like many of you, I need to stay informed about AI developments, but:

Reading everything is impossible (50+ articles published daily)

Most "news" is repetitive or marketing fluff

Important breakthroughs get buried in the noise

Manually filtering takes time I don't have

I needed something that would automatically collect, filter, and deliver only the genuinely important AI updates directly to my inbox. So I built it.

How It Works

The tool does four simple things:

Collects articles from trusted sources (RSS feeds and tech blogs) ✅

Removes duplicates and extracts the main content from each article ✅

Creates smart summaries that explain why each development matters ✅

Delivers everything in a clean email with a CSV attachment ✅
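The four stages above can be sketched as a small Python pipeline. This illustrates the flow only and is not the repository's code. The `Article` fields and the stage parameter names are hypothetical. Each stage is passed in as a plain function, so the real feedparser, crawl4ai, Fireworks, or SMTP-backed implementation can be plugged in.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Article:
    # Hypothetical record; the real project may track different fields.
    title: str
    url: str
    content: str = ""
    summary: str = ""


def run_pipeline(
    collect: Callable[[], List[Article]],
    dedupe_and_extract: Callable[[List[Article]], List[Article]],
    summarize: Callable[[Article], str],
    deliver: Callable[[List[Article]], None],
) -> List[Article]:
    """Run collect -> dedupe/extract -> summarize -> deliver; return the delivered articles."""
    articles = dedupe_and_extract(collect())
    for article in articles:
        article.summary = summarize(article)
    deliver(articles)
    return articles
```

Keeping each stage swappable makes it easy to test one stage with stubs, for example replacing `deliver` with `print` while tuning prompts.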

Collection: Where the News Comes From

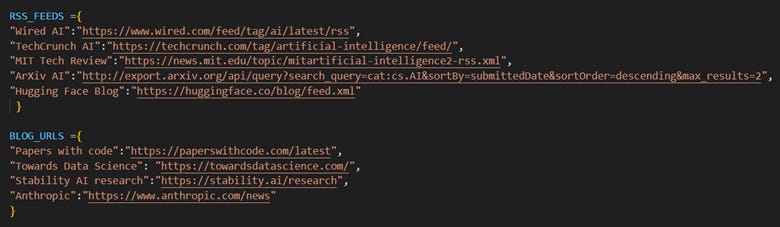

The script checks two types of sources:

RSS feeds, which it reads with Python's feedparser library

Blogs without feeds, where it uses crawl4ai with deepseek-v3 to identify recent articles
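Here is a minimal sketch of a recency filter for RSS entries. It assumes a 24-hour window, which may differ from what the repository uses. feedparser exposes each entry's date as `published_parsed` (or `updated_parsed`), a UTC `time.struct_time`, and `calendar.timegm` converts that to a timestamp. In practice `entries` would come from `feedparser.parse(url).entries`. Plain dicts stand in for feed entries here so the snippet runs without network access.

```python
import calendar
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Mapping, Optional


def recent_entries(
    entries: Iterable[Mapping],
    hours: int = 24,
    now: Optional[datetime] = None,
) -> List[Mapping]:
    """Keep entries published within the last `hours` hours; skip undated ones."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(hours=hours)
    kept = []
    for entry in entries:
        # feedparser stores parsed dates as UTC struct_time values.
        parsed = entry.get("published_parsed") or entry.get("updated_parsed")
        if parsed is None:
            continue
        published = datetime.fromtimestamp(calendar.timegm(parsed), tz=timezone.utc)
        if published >= cutoff:
            kept.append(entry)
    return kept
```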

Quality Control: Removing Duplicates

The article list then goes through a second AI pass that identifies and removes duplicate stories and poor summaries. It uses a detailed instruction to deduplicate content and drop poorly written or erroneous summaries.
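The deduplication pass can be sketched as two helpers. One numbers the candidate articles in a prompt; the other parses the model's reply back into a filtered list. The instruction wording and the "JSON array of indices to keep" reply format are assumptions for illustration, not the repository's actual prompt. Parsing defensively helps because models sometimes wrap JSON in extra text.

```python
import json
import re
from typing import Dict, List

# Hypothetical wording; the project's real instruction is more detailed.
DEDUP_INSTRUCTION = (
    "Below is a numbered list of AI news items. Several may describe the same story, "
    "and some summaries may be poorly written or wrong. Return ONLY a JSON array of the "
    "indices to keep: one item per distinct story, dropping weak or erroneous summaries."
)


def build_dedup_prompt(articles: List[Dict[str, str]]) -> str:
    """Number each article so the model can refer to it by index."""
    lines = [f"[{i}] {a['title']}: {a['summary']}" for i, a in enumerate(articles)]
    return DEDUP_INSTRUCTION + "\n\n" + "\n".join(lines)


def apply_dedup_reply(articles: List[Dict[str, str]], reply: str) -> List[Dict[str, str]]:
    """Keep articles whose indices appear in the first JSON integer array in the reply."""
    match = re.search(r"\[[\d,\s]*\]", reply)
    if match is None:
        return articles  # fail open: better to send duplicates than nothing
    try:
        indices = json.loads(match.group(0))
    except json.JSONDecodeError:
        return articles
    seen = set()
    kept = []
    for i in indices:
        # Ignore out-of-range and repeated indices the model may emit.
        if 0 <= i < len(articles) and i not in seen:
            seen.add(i)
            kept.append(articles[i])
    return kept
```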

Smart Extraction: Getting the Real Content

Instead of dealing with messy HTML, ads, and navigation elements, I give crawl4ai a prompt that extracts just the article content (the full prompt is in the repository).
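The project delegates extraction to crawl4ai with an LLM prompt. For comparison, here is a much cruder, non-LLM sketch of the same goal that uses only Python's `html.parser`. It ignores text inside `script`, `style`, `nav`, `header`, `footer`, and `aside` elements and keeps paragraph text. That tag list is an assumption. A heuristic like this will miss content on many real sites, which is one reason an LLM-guided extractor is attractive.

```python
from html.parser import HTMLParser
from typing import List

# Elements whose text is treated as page chrome, not article content (an assumption).
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside"}


class ParagraphExtractor(HTMLParser):
    """Collect the text of <p> elements that are not nested in SKIP_TAGS elements."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a SKIP_TAGS element
        self.in_paragraph = False
        self.current: List[str] = []
        self.paragraphs: List[str] = []

    def flush(self) -> None:
        """Store the open paragraph (whitespace-normalized) if it has text."""
        if self.in_paragraph:
            text = " ".join("".join(self.current).split())
            if text:
                self.paragraphs.append(text)
        self.in_paragraph = False
        self.current = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.flush()
            self.skip_depth += 1
        elif tag == "p" and self.skip_depth == 0:
            self.flush()  # HTML allows an omitted </p>
            self.in_paragraph = True

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag == "p":
            self.flush()

    def handle_data(self, data):
        if self.in_paragraph and self.skip_depth == 0:
            self.current.append(data)


def extract_main_text(html: str) -> str:
    """Return the page's paragraph text, separated by blank lines."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    parser.flush()
    return "\n\n".join(parser.paragraphs)
```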

Summarization: Understanding Why It Matters

The most valuable part is how it summarizes each article. Rather than producing generic summaries, it combines a system prompt and a user prompt to deliver a one-line summary of the specific innovation or finding and why it is significant.
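Here is a minimal sketch of a summarization call against Fireworks' OpenAI-compatible chat completions endpoint, using only the standard library. Several details are assumptions: the prompt wording, the `temperature` and `max_tokens` values, and reading the key from a `FIREWORKS_API_KEY` environment variable. The repository's real prompts are more detailed. `build_payload` runs offline; `summarize` makes a real HTTP request.

```python
import json
import os
import urllib.request
from typing import Dict, List

FIREWORKS_URL = "https://api.fireworks.ai/inference/v1/chat/completions"
MODEL = "accounts/fireworks/models/deepseek-v3"

# Hypothetical prompts; the project's real system and user prompts live in its repository.
SYSTEM_PROMPT = (
    "You are an AI research analyst summarizing news for busy practitioners. "
    "Be specific and never add facts that are not in the article."
)
USER_TEMPLATE = (
    "Title: {title}\n\nArticle:\n{content}\n\n"
    "In ONE sentence, state the specific innovation or finding and why it is significant."
)


def build_payload(title: str, content: str, temperature: float = 0.2,
                  max_tokens: int = 120) -> Dict:
    """Combine the system prompt and a filled-in user prompt into a request body."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_TEMPLATE.format(title=title, content=content)},
    ]
    return {"model": MODEL, "messages": messages,
            "temperature": temperature, "max_tokens": max_tokens}


def summarize(title: str, content: str, api_key: str = "") -> str:
    """POST the payload and return the first choice's message text."""
    api_key = api_key or os.environ["FIREWORKS_API_KEY"]
    request = urllib.request.Request(
        FIREWORKS_URL,
        data=json.dumps(build_payload(title, content)).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"].strip()
```

Keeping the system prompt fixed and varying only the user prompt per article keeps the summary style consistent. A lower temperature favors grounded, repeatable summaries. Swapping `MODEL` for a smaller model, such as the llama4-scout-instruct-basic model the project also uses, is a one-line change.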

The complete architecture is laid out in the code (look at it to learn more!).

What It Costs to Run

The system is surprisingly affordable:

Hosting: Free (runs on my local system, for now 😊)

Fireworks API: ~$0.75 per day (processing ~15 articles)

Email service: Free (using a free Gmail account)

Run time: About 4.5 minutes on average for 15 articles

Total monthly cost: About $22.50 (~$0.75 × 30 days), or roughly the price of four Starbucks chai lattes (come on, skip them!).
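The monthly figure follows directly from the daily numbers above. This small helper redoes the arithmetic and also derives per-article cost and time. The 30-day month is an assumption.

```python
def run_economics(daily_cost: float = 0.75, articles_per_day: int = 15,
                  minutes_per_run: float = 4.5, days: int = 30) -> dict:
    """Derive monthly cost plus per-article cost and time from the daily figures."""
    return {
        "monthly_cost": daily_cost * days,
        "cost_per_article": daily_cost / articles_per_day,
        "seconds_per_article": minutes_per_run * 60 / articles_per_day,
    }
```

With the defaults, `run_economics()` gives a monthly cost of 22.5 dollars, about $0.05 per article, and 18 seconds of processing per article.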

Next Steps: Build Your Own

Want to create your own AI news filter? Here's how to get started:

Clone the repository: https://github.com/san-s1819/GenAI-news-curator

Configure your sources: Edit the RSS_FEEDS and BLOG_URLS dictionaries

Set up your API keys: You'll need a Fireworks.ai account and an API key

Configure email delivery: Update the SMTP settings (multiple recipients are supported, yeahhh!)
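Here is a sketch of the configuration and delivery step, with several parts assumed for illustration:

- the shape of `RSS_FEEDS` and `BLOG_URLS` (source name mapped to URL)
- the placeholder URLs
- the CSV columns
- the environment variable names

Check the repository for the real settings. Gmail's SMTP server typically requires an app password rather than your normal account password.

```python
import csv
import io
import os
import smtplib
from email.message import EmailMessage
from typing import Dict, List

# Hypothetical shapes for the two source dictionaries: display name -> URL.
# The URLs are placeholders, not the repository's real sources.
RSS_FEEDS: Dict[str, str] = {"Example AI Feed": "https://example.com/feed.xml"}
BLOG_URLS: Dict[str, str] = {"Example AI Blog": "https://example.com/blog"}

SMTP_HOST = "smtp.gmail.com"
SMTP_PORT = 465  # implicit TLS, used with SMTP_SSL


def build_digest(articles: List[Dict[str, str]], sender: str,
                 recipients: List[str]) -> EmailMessage:
    """Build a plain-text digest email with the same rows attached as a CSV file."""
    msg = EmailMessage()
    msg["Subject"] = f"AI news digest: {len(articles)} stories"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)  # multiple recipients in one header
    msg.set_content("\n\n".join(
        f"{a['title']}\n{a['summary']}\n{a['url']}" for a in articles))

    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "summary", "url"],
                            extrasaction="ignore")
    writer.writeheader()
    writer.writerows(articles)
    msg.add_attachment(buffer.getvalue(), subtype="csv", filename="ai_news.csv")
    return msg


def send_digest(msg: EmailMessage) -> None:
    """Send through Gmail's SMTP server; the env var names are hypothetical."""
    with smtplib.SMTP_SSL(SMTP_HOST, SMTP_PORT) as server:
        server.login(os.environ["SMTP_USER"], os.environ["SMTP_APP_PASSWORD"])
        server.send_message(msg)  # delivers to every address in the To header
```

Building the message separately from sending it lets you inspect or test the digest without touching the network.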

Going Further: Extending the System

Once you have the basic system running, consider these enhancements:

Incorporate additional blog sources and RSS feeds

Prompt engineering FTW: Tweak system and user prompts for better article scraping and summarization

Temperature: Raise the temperature for more creative summaries, or lower it for more grounded ones.

Rate limits: Be wary of API rate limits; adjust your token limits and retry mechanism accordingly.

Smaller models: Use smaller models instead of the much larger deepseek-v3 to save on API costs.

Deployment via GitHub Actions: Run the code on GitHub Actions to automate the whole process and send emails on a schedule.

AWS deployment: Use Amazon EventBridge + AWS Lambda to automate the whole process.

Impact filtering: Filter articles by their potential impact before sending emails.
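For the rate-limit point above, a common pattern is retrying with exponential backoff and jitter. The sketch below is generic. It assumes the wrapped call raises an exception on HTTP 429 or similar transient errors. The retry count and delays are arbitrary starting points, not values from the repository.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_backoff(
    call: Callable[[], T],
    retries: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call()`, retrying up to `retries` extra times on `retry_on` errors.

    The wait doubles after each failure (capped at max_delay) and is scaled by
    random jitter in [0.5, 1.0] so parallel jobs do not retry in lockstep.
    """
    for attempt in range(retries + 1):
        try:
            return call()
        except retry_on:
            if attempt == retries:
                raise  # out of retries: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")
```

With a urllib-based client, you might pass `retry_on=(urllib.error.HTTPError,)` and wrap the request in a lambda. Injecting `sleep` keeps the function easy to test without real waiting.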

Can this be done just with a prompt?

Umm, definitely not! This AI project requires API integration with multiple services (SMTP, Fireworks.ai, web crawling) that cannot be configured through a prompt alone. The system needs persistent, scheduled execution to monitor feeds and analyze content over time, which a prompt cannot provide. Additionally, the project involves complex error handling for various edge cases (like different date formats in RSS feeds) that only emerge through real-world testing and iteration.
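As an example of the date-format edge cases mentioned above, feeds mix RFC 822 dates (`Tue, 06 May 2025 14:30:00 GMT`), ISO 8601 timestamps, and occasionally bare dates. A minimal standard-library normalizer might try each format in turn. The set of formats and the rule of assuming UTC when no zone is given are assumptions, not the repository's behavior.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def normalize_date(raw: str) -> Optional[datetime]:
    """Parse common RSS/Atom date strings into an aware UTC datetime, or return None."""
    raw = raw.strip()
    if not raw:
        return None
    parsed: Optional[datetime] = None
    try:
        parsed = parsedate_to_datetime(raw)  # RFC 822 / RFC 2822, typical in RSS
    except (TypeError, ValueError):  # older Pythons raise TypeError, newer ValueError
        pass
    if parsed is None:
        try:
            # ISO 8601, typical in Atom; "Z" is rewritten for Python versions before 3.11.
            parsed = datetime.fromisoformat(raw.replace("Z", "+00:00"))
        except ValueError:
            return None
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assume UTC when no zone is given
    return parsed.astimezone(timezone.utc)
```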

Final thoughts

While I built this system for AI news, the approach applies to any domain with information overload. Whether you're tracking developments in climate tech, biotech, or financial markets, combining automation with AI can transform how we consume information.

As we continue navigating an increasingly complex information landscape, tools like this represent a shift from simply consuming more content to intelligently consuming the right content, curated for maximum insight and impact with minimal time investment.

What information streams do you struggle to keep up with? Could a similar approach help solve your information overload? Share your thoughts in the comments.

Note: This project uses the deepseek-v3 and llama4-scout-instruct-basic models on Fireworks.ai for article scraping and summarization. The full code, including all prompts and configuration options, is available on GitHub.