This Week in AI - Week of 2nd March

This week, AI took significant strides with major model launches, innovative features, and strategic moves by leading companies. Here's a concise roundup of the most impactful developments.

OpenAI Unveils GPT-4.5 with Enhanced Accuracy

Here's What You Need to Know:

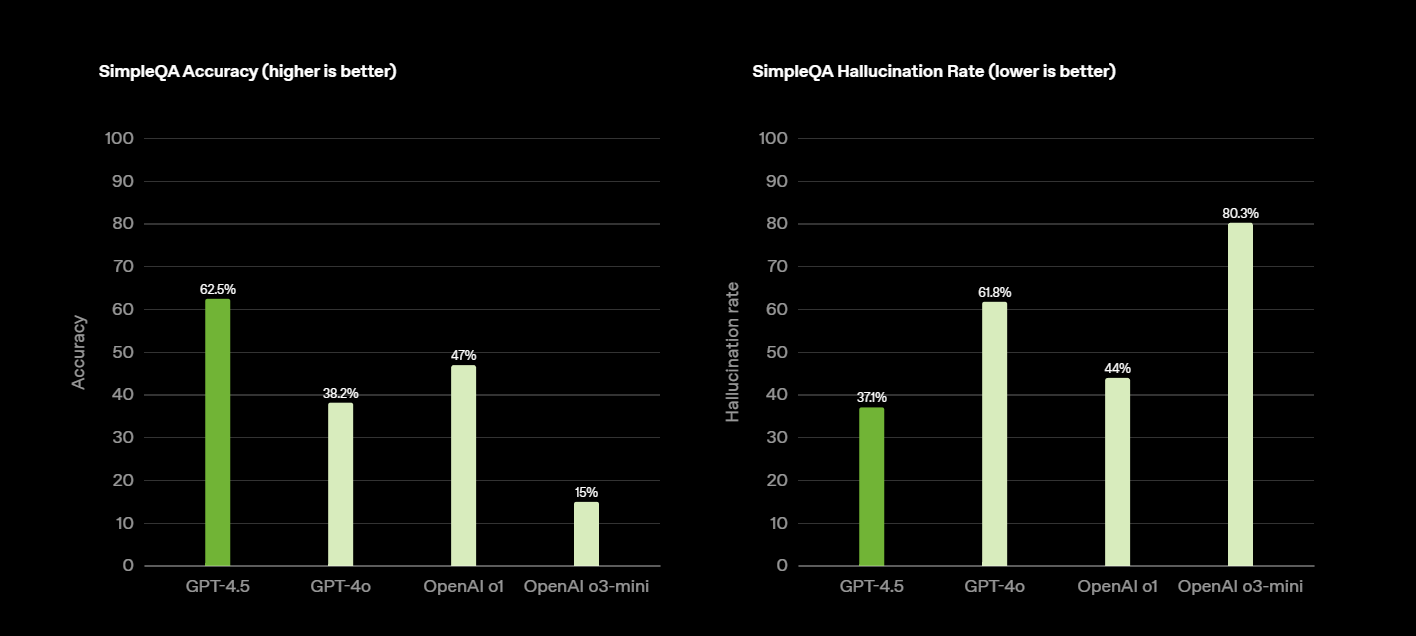

OpenAI has released GPT-4.5, an advanced iteration of its AI language model, now available to ChatGPT Pro users. OpenAI reports a substantial reduction in "hallucinations" on its SimpleQA benchmark, with the hallucination rate falling from 61.8% for GPT-4o to 37.1% for GPT-4.5, which should translate into more precise and reliable responses. With improved context understanding and refined knowledge recall, GPT-4.5 aims to balance creativity with factual accuracy better than earlier models.
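To put the two hallucination figures in perspective, here is a quick back-of-the-envelope calculation. It only uses the 61.8% and 37.1% numbers quoted above; the function name is just for illustration.

```python
def reduction(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = before_pct - after_pct
    relative = absolute / before_pct * 100
    return absolute, relative


absolute_drop, relative_drop = reduction(61.8, 37.1)
print(f"{absolute_drop:.1f} percentage points, {relative_drop:.1f}% relative")
# prints: 24.7 percentage points, 40.0% relative
```

In other words, the quoted rate falls by about 25 percentage points, or roughly 40% in relative terms, but more than a third of answers on that measure would still contain a hallucination.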

Why It’s Important for AI Professionals:

The improved accuracy of GPT-4.5 enhances the reliability of AI-generated content, benefiting applications in writing, programming, data analysis, customer support, and even scientific research. This advancement allows AI practitioners to develop more dependable tools and services, improving user trust and making AI integration more viable across industries.

Why It Matters for Everyone Else:

Users can expect more trustworthy interactions with AI applications, reducing misinformation and enhancing user experience across various platforms. From smarter virtual assistants to more reliable creative tools, everyday users will benefit from AI that better understands intent and delivers relevant, factual responses.

Aish’s Prediction:

GPT-4.5 took a while to arrive after GPT-4, the previous foundation model in the series. While OpenAI has not revealed the model size, it seems to be very large, judging by the API price they are serving it at. It is 30x-50x more expensive than the o1 model, and it is currently only rolled out for Pro users ($200/month plan). I feel they might push out a smaller, distilled version of the GPT-4.5 model. Do note that this is not a reasoning model, and it was not built to be one! Also note that GPT-4.5 has a knowledge cut-off date of October 2023, which means it doesn’t have the most recent information. So be mindful of that when using the model.

Anthropic Introduces Claude 3.7: A Hybrid Reasoning AI Model

Here's What You Need to Know:

Anthropic has launched Claude 3.7 Sonnet, the first "hybrid reasoning" AI model capable of both quick responses and in-depth analysis. Users can adjust the model's reasoning depth, balancing intelligence with time and budget constraints, making it one of the most flexible models available for both commercial and research use.

Why It’s Important for AI Professionals:

Claude 3.7's flexibility allows AI developers to tailor the model's reasoning capabilities to specific tasks, optimizing performance and resource allocation. Whether used in rapid customer service interactions or deep scientific analysis, Claude 3.7 provides developers with an adaptable tool that evolves based on project needs.
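For developers, here is a rough sketch of what "adjusting reasoning depth" could look like when building a request. Treat it as an illustration, not the official client: `build_request` is a hypothetical helper, the model name is a placeholder, and the `thinking` / `budget_tokens` field names are modeled on Anthropic's extended thinking parameter as I understand it, so please check the current API documentation before relying on them. The sketch only builds a payload and does not call any API.

```python
def build_request(prompt: str, thinking_budget: int = 0, max_tokens: int = 2048) -> dict:
    """Build a request payload; thinking_budget=0 asks for a quick answer."""
    payload = {
        "model": "claude-3-7-sonnet",  # placeholder model name (assumption)
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget > 0:
        # Assumption: the reasoning budget must leave room for the final answer.
        if thinking_budget >= max_tokens:
            raise ValueError("thinking_budget must be smaller than max_tokens")
        # Assumed field names for the adjustable reasoning budget.
        payload["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return payload


# Quick reply for a customer-service style question: no reasoning budget.
quick = build_request("What are your opening hours?")

# Deeper analysis: allow a larger reasoning budget within a larger token limit.
deep = build_request("Compare these two study designs.", thinking_budget=4000, max_tokens=8000)
```

The point of the design is that the same model serves both calls; only the budget changes, so cost and latency can be tuned per task.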

Why It Matters for Everyone Else:

This development means more personalized and efficient AI interactions, as the model can adapt its responses based on user needs, enhancing overall user satisfaction. Businesses and individual users alike will enjoy a smoother experience with AI tools that adjust dynamically to complexity and urgency.

Aish’s Prediction:

I am personally not a huge Claude user (I use it about half as often as ChatGPT), but the big differentiator I see with Claude is more human-like writing. So if your work or hobby involves anything to do with writing, you are going to love this new model update, and I highly recommend using Claude for it. A few of the bottlenecks I still find with Claude are weaker performance on multi-modal data, searching the internet for recent information, and instruction following that is not the best (compared to ChatGPT). Over the last several updates, Anthropic hasn’t really prioritized these, which makes me feel that it isn’t a path they are following and that the use case they are targeting is more specific to writing.

Adobe Launches Firefly Video: AI-Powered Video Editing

Here's What You Need to Know:

Adobe has introduced Firefly Video, an AI-driven tool designed to revolutionize video editing by automating complex tasks, enhancing creative workflows, and introducing generative capabilities for scene creation and visual effects.

Why It’s Important for AI Professionals:

Firefly Video showcases the integration of AI in creative industries, providing AI professionals with opportunities to develop tools that augment human creativity and streamline production processes. The growing intersection of AI and creativity presents new avenues for product innovation, workflow optimization, and creative collaboration.

Why It Matters for Everyone Else:

Content creators and businesses can now produce high-quality videos more efficiently, reducing costs and time associated with traditional video editing methods. Even small businesses and individual creators gain access to professional-level video capabilities, lowering barriers to entry in digital content creation.

Aish’s Prediction:

I have used Adobe Firefly a ton of times for generating images, and I like the results better than DALL-E for specific styles (realistic, yet artsy). I am thrilled to see them launching video capabilities in the tool. Of course, the majority of creative professionals are loyal to Adobe tools, so offering a richer ecosystem of tools is the natural move for Adobe.

Google Introduces Veo2 for YouTube Shorts

Here's What You Need to Know:

Google has unveiled Veo2, an AI tool specifically designed to enhance YouTube Shorts by providing creators with advanced editing features, personalized recommendations, and intelligent tagging for improved discoverability.

Why It’s Important for AI Professionals:

Veo2 exemplifies the application of AI in optimizing short-form video content, offering AI professionals insights into user engagement, content personalization, and recommendation systems. The tool’s analytics capabilities offer a goldmine of data for understanding what resonates with audiences.

Why It Matters for Everyone Else:

Creators can leverage Veo2 to produce more engaging and tailored content, potentially increasing viewer retention and satisfaction on YouTube Shorts. Viewers will also benefit from more relevant and personalized content recommendations, enhancing their overall platform experience.

Aish’s Prediction:

I love that Google built Veo2 not just as its answer to GenAI video models, but also to integrate directly with one of its most used products: YouTube. The creator economy is booming, and I believe it has still not reached its maximum potential, so making these tools accessible to help creators be more efficient is brilliant!

Amazon Launches Alexa+: The Next-Gen Voice Assistant

Here's What You Need to Know:

Amazon has rolled out Alexa+, an upgraded version of its voice assistant, featuring enhanced natural language understanding, improved conversational memory, and expanded integration with smart home devices, creating a more holistic and intelligent home assistant.

Why It’s Important for AI Professionals:

Alexa+ demonstrates advancements in conversational AI and the seamless integration of AI with IoT devices, providing a richer development platform for AI professionals. It opens new possibilities for developers to build adaptive, cross-device applications that leverage Alexa+’s contextual awareness.

Why It Matters for Everyone Else:

Users can enjoy more intuitive interactions with their smart home ecosystems, making daily tasks more convenient and enhancing the overall user experience. With better memory and context awareness, Alexa+ can follow multi-step conversations and anticipate user needs more effectively.

Aish’s Prediction:

Let me start with the pricing, which is great! Offering an advanced voice assistant for under $20 is going to make it super accessible to everyone who uses Amazon Echo and other smart home tools. Their integrations have always been good, but the virtual assistant wasn’t the best. I am hoping that with this update, the “I didn’t get that” incidents will be much rarer. Based on the launch specifications, Alexa+ is not just an AI-powered voice assistant; it is agentic too. They have integrated it with several apps, so you can make it “do” things for you, like ordering groceries or making reservations.

DeepSeek Open Source Week: Introducing FlashMLA and DeepEP

Here's What You Need to Know:

DeepSeek has announced FlashMLA and DeepEP during its Open Source Week, aiming to accelerate machine learning workflows, enhance data processing capabilities, and lower the technical barriers for data scientists and machine learning engineers.

FlashMLA is an efficient Multi-head Latent Attention (MLA) decoding kernel optimized for NVIDIA Hopper GPUs, particularly the H800 model.

DeepEP is a specialized communication library designed for Mixture-of-Experts (MoE) models and expert parallelism (EP).
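If MoE is new to you, the toy sketch below shows the "dispatch" and "combine" steps that expert parallelism has to perform. It is a single-process illustration in plain Python, not DeepEP's API: the function names, the scalar "tokens", and the tiny lambda "experts" are all made up for the example. In a real deployment the experts sit on different GPUs, and moving tokens to them and back is the communication that a library like DeepEP is built to speed up.

```python
from collections import defaultdict


def top_k_route(scores, k):
    """Pick the k highest-scoring experts; normalize their weights to sum to 1."""
    top = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:k]
    total = sum(scores[e] for e in top)
    return [(e, scores[e] / total) for e in top]


def moe_layer(tokens, router_scores, experts, k=2):
    # Dispatch: group (token index, weight) pairs by the expert they are sent to.
    inbox = defaultdict(list)
    for t, scores in enumerate(router_scores):
        for expert_id, weight in top_k_route(scores, k):
            inbox[expert_id].append((t, weight))

    # Combine: each expert processes its tokens; results are summed per token,
    # weighted by the normalized router score.
    outputs = [0.0] * len(tokens)
    for expert_id, items in inbox.items():
        for t, weight in items:
            outputs[t] += weight * experts[expert_id](tokens[t])

    dispatch_map = {e: sorted(t for t, _ in items) for e, items in inbox.items()}
    return outputs, dispatch_map


# Four toy "experts" and three scalar "tokens" (purely illustrative values).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x * x]
tokens = [1.0, 2.0, 3.0]
router_scores = [
    [0.6, 0.2, 0.1, 0.1],
    [0.1, 0.1, 0.3, 0.5],
    [0.3, 0.5, 0.2, 0.0],
]

outputs, dispatch_map = moe_layer(tokens, router_scores, experts, k=2)
print(dispatch_map)  # which token indices each expert received
print(outputs)
```

Each token only visits two of the four experts, which is why MoE models can grow very large without every token paying for every parameter. The price is the shuffling of tokens between devices shown in the dispatch map.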

Why It’s Important for AI Professionals:

DeepSeek’s FlashMLA and DeepEP target faster training and inference, better memory efficiency, and optimized scaling, especially for large language models and Mixture-of-Experts (MoE) architectures. They are designed to get the most out of high-end GPUs like the NVIDIA H800, enabling larger models, faster inference, and smarter resource distribution across GPUs. However, they are hardware-intensive and come with a learning curve, which favors well-resourced teams. These tools unlock new model designs and expand what’s possible in NLP and generative AI, but only if you have the compute to match.

Why It Matters for Everyone Else:

The release of FlashMLA and DeepEP can lead to faster development of AI applications, resulting in more efficient services and products for consumers. The optimizations allow for even larger AI models, which could result in more capable and knowledgeable AI assistants in the future. As these technologies are adopted, you might notice improvements in various AI-powered services, from chatbots to content generation tools.

Aish’s Prediction:

DeepSeek's commitment to open-source development will likely inspire other companies to contribute, accelerating technological advancements and democratizing access to powerful AI tools. They have for sure been giving giants like OpenAI, Anthropic, and other proprietary model providers a run for their money! I am seeing a LOT of developers switching to DeepSeek models, and with more optimizations released by DeepSeek, we will see a lot of these experiments going to production.

AI continues to evolve rapidly, bringing forth innovations that reshape industries and daily life. Let's stay informed and proactive in harnessing these technologies responsibly to build a better future.

Thank you for reading "AI with Aish - Week in AI." Stay curious, stay informed, and see you next week!

PS: This newsletter is AI-generated every week: it fetches the most recent news based on a detailed set of prompts that Aish provides. Once the newsletter is ready, Aish personally adds her thoughts and predictions to each of the news pieces.