This Week in AI: Week of June 1st, 2025

This week was a whirlwind in the AI space, with major players launching new tools and platforms, significant acquisitions shaking up the data landscape, and ongoing debates about AI's societal impact heating up. From your phone's offline AI capabilities to the very definition of entry-level jobs, AI is evolving fast. Let’s dive in!

OpenAI Ups the Ante: o3 Operator Boosts Performance & Safety, Academy Opens Doors to AI Literacy

Here’s What You Need to Know:

OpenAI has rolled out the o3 Operator, an upgraded AI model designed for enhanced performance and safer computer interactions. Simultaneously, they've launched the OpenAI Academy, a free, self-paced learning platform aimed at helping everyone understand and utilize AI more effectively.

Why It’s Important for AI Professionals:

The o3 Operator likely translates to more sophisticated APIs and potentially new frameworks for developers, emphasizing safer and more capable AI agent interactions. For researchers, it presents a new benchmark for complex task automation and safety protocols. While the Academy targets a broad audience, it can be a valuable resource for upskilling non-technical team members or for foundational learning.

Why It Matters for Everyone Else:

A more advanced AI like the o3 Operator could mean more intuitive digital assistants and smarter automation in everyday software, making our tech interactions smoother. The OpenAI Academy is a fantastic step towards demystifying AI, empowering more people to understand a technology that's increasingly shaping our daily lives and future.

Aish’s Prediction:

The o3 Operator sounds like OpenAI is seriously pushing towards AI agents that can reliably do things on our computers, not just chat. We're still a ways from 'Her,' but it's a solid step.

As for the Academy, I’m thrilled! I’ve always said democratizing AI knowledge is crucial. I predict a significant uptick in general AI understanding, which hopefully translates into more diverse and ethically-minded AI applications.

In the next 6-12 months, I’m confident we’ll see a wave of startups leveraging these advanced agent capabilities to build hyper-specific productivity tools. Think ultra-personalized workflows.

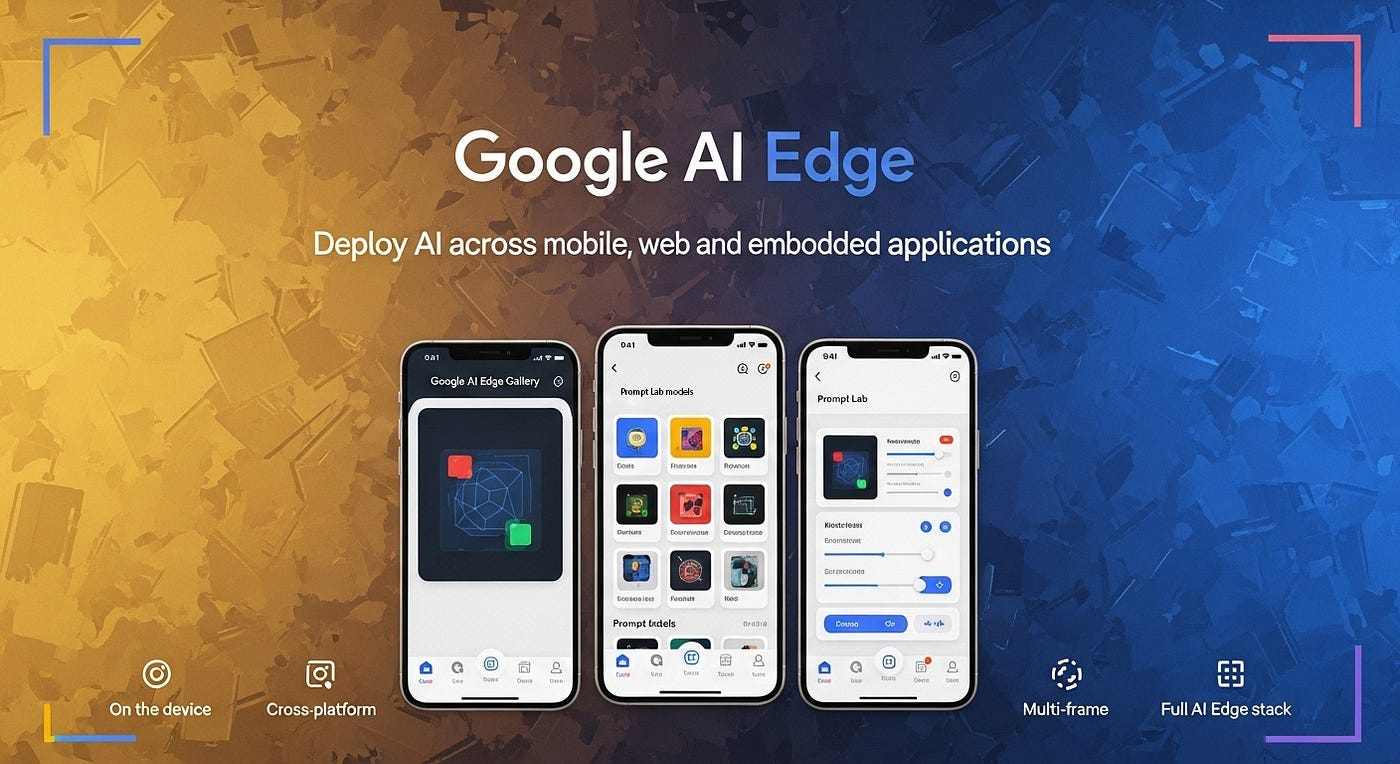

Google Goes Offline: New AI Edge Gallery App Puts Hugging Face Models Directly on Your Phone

Here’s What You Need to Know:

Google has quietly released the AI Edge Gallery app for Android (iOS version coming soon). This app allows users to find, download, and run a variety of open-source AI models from Hugging Face directly on their phones, even without an internet connection, for tasks like image generation, Q&A, and coding.

Why It’s Important for AI Professionals:

This move is a significant nod to on-device AI, enabling developers to experiment with and deploy models locally, bypassing cloud dependency for certain tasks. It opens up avenues for privacy-centric AI applications and pushes the boundaries of edge computing. Engineers will need to hone their skills in model optimization (think quantization and pruning) to make powerful models work efficiently on mobile hardware.
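To make "quantization and pruning" a little more concrete, here is a minimal pure-Python sketch of both ideas applied to a flat list of weights: symmetric int8 post-training quantization and unstructured magnitude pruning. This is an illustration of the general techniques, not how AI Edge Gallery or any particular framework implements them. The function names are hypothetical, and real toolchains work on tensors, often quantize per-channel, and calibrate activations as well.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization (hypothetical helper).

    Each weight w is approximated as scale * q, where q is an int in [-127, 127].
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale maps the largest-magnitude weight to +/-127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    # Indices of the k smallest-magnitude weights.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.82, -0.05, 0.31, -1.27, 0.004, 0.6]
    q, scale = quantize_int8(w)
    print("int8 values:", q, "scale:", scale)
    print("reconstructed:", dequantize_int8(q, scale))
    print("50% pruned:", magnitude_prune(w, 0.5))
```

Storing weights as int8 instead of float32 cuts their memory footprint to a quarter, at the cost of a rounding error of at most half a quantization step per weight, which is the basic trade-off that makes larger models fit on phones.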

Why It Matters for Everyone Else:

Imagine powerful AI tools that work on your phone without needing Wi-Fi or sending your personal data to the cloud. This means faster responses for some AI features, enhanced privacy, and AI that’s always available – like having a super-smart assistant in your pocket, even when you're off-grid.

Aish’s Prediction:

Google is making it easy to run Hugging Face models on your phone, offline? That's a big win for on-device AI and privacy. Your data stays with you, which is how it should be for many applications. My bet is that within 12 months, we’ll see a surge in innovative apps with cool, privacy-first AI features because of this. It also definitely turns up the heat on Apple to innovate further in their on-device AI strategy.

PS: If you are keen on on-device (edge) ML, you should know about Decompute, which is optimizing ML models to run on CPUs. In the interest of transparency: I am an investor in the company.

Hugging Face Steps into the Physical World: Unleashes Open Source Humanoid Robots

Here’s What You Need to Know:

AI development platform Hugging Face has ventured into robotics by releasing two open-source humanoid robots: HopeJR, a full-size robot with 66 degrees of freedom capable of walking, and Reachy Mini, a smaller desktop unit for testing AI applications.

Why It’s Important for AI Professionals:

This signals Hugging Face's serious commitment to robotics, offering open hardware and software that could significantly accelerate research in embodied AI and reinforcement learning in physical systems. It allows AI developers to more easily test their models on actual robots, helping to bridge the often challenging simulation-to-reality gap.

Why It Matters for Everyone Else:

Open-sourcing robot designs can democratize robotics research, potentially leading to faster innovation and lower costs. This could make advanced robots more accessible for educational purposes, research labs, and eventually, for assistance in various settings. While we're not expecting robot butlers tomorrow, this fosters a community approach to building our robotic future.

Aish’s Prediction:

Hugging Face diving into open-source robotics hardware dramatically lowers the barrier to entry for serious robotics research, just like they did for AI models. Robotics development cycles run longer than pure software, but I predict that in the next 12-18 months we'll see an explosion of creative robotics projects built on these platforms. Don't expect a C-3PO in your kitchen next year, but do expect robotics research to get a massive boost.

Subscribe to AI with Aish to keep reading this post and get 7 days of free access to the full post archives.